2025 has been challenging due to a third year of recession, yet promising in terms of future opportunities. Fortunately, I found the time to implement several private projects using AI to build little helpers, tools, and games. Some of them made it into this blog, others did not (see below). Furthermore, I had the honor to speak at my home university, TU Dortmund, about GenAI as a tool to improve software and software engineering. This will lead to a full course in 2026, which I will teach together with Prof. Falk Howar. I’m looking forward to that.

Starting in August 2025 (when I wrote my last post), a lot changed for me at work, which makes me optimistic about the future. I pitched a set of architectural principles for enhancing enterprise applications with AI internally at my company. We have already done many such projects, and these principles combine what we have learned so far. I named this new class of information systems: AI Supercharged Enterprise Applications.

For this new approach, I built a complete application (with AI) that showcases an example architecture (following the principles) as well as use cases for it. This setup offers a variety of new possibilities regarding user experience, automation, degrees of freedom for the user, very efficient modernization paths, and many more. The pitch and demos were very well received, and I got the mandate to further scale up the delivery for building such systems for our customers. I wrote a blog post that will be published on January 5 on the adesso blog, which I will repost here. It gives a glimpse of our forecast regarding the direction of future software systems. More publications are planned for 2026.

But this was not the only change… Shortly after I took on this new duty (and, first and foremost, this new opportunity), I was asked to scale deliveries for Conversational AI as well as the use of GenAI in the Software Development Lifecycle (SDLC). The goal is to make software development better and more efficient, not buggier and harder to understand 🤓, a risk that should not be underestimated.

As mentioned above, I did some AI-assisted projects in 2025 that I have not described on this blog (if you are interested in any of them, please let me know in the comments):

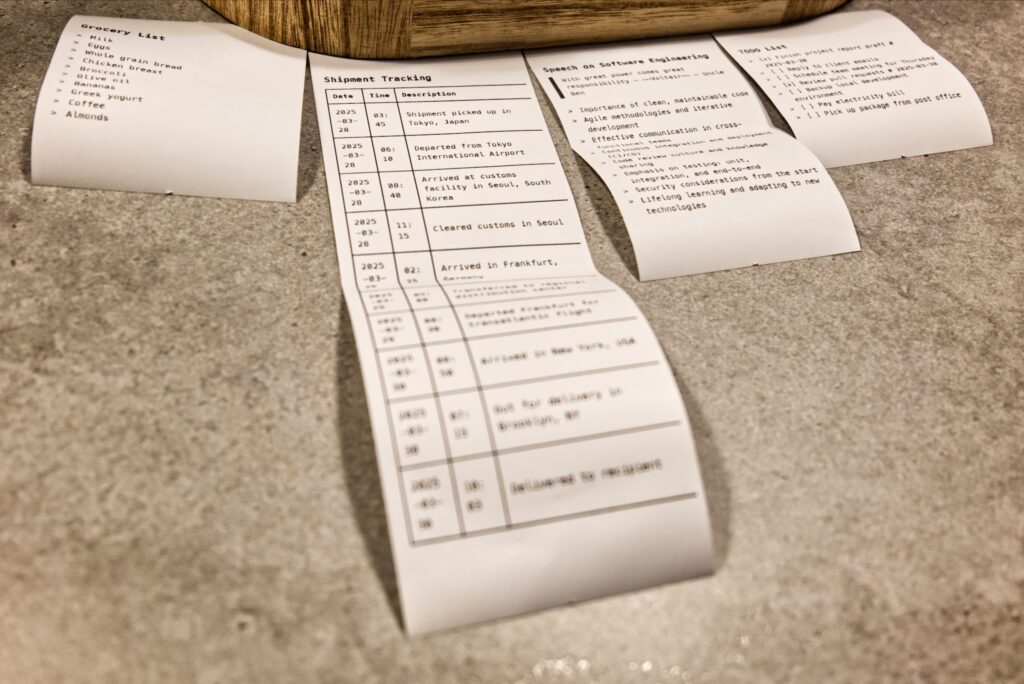

- Cashier Play (Dec. 2024 – Jan. 2025): A digital cash register that was quite challenging to build and revealed some limitations of GPT-4o and o1 (for example, in writing runtime optimizations), which later models overcame.

- Home Assistant automation for charging an electric car with surplus energy from a solar power system

- Home Assistant automation for spooky Halloween lighting

- Home Assistant automation for a sophisticated alarm system

- A virtual classroom that allows you to upload exercise sheets as a “teacher”, download, solve, and reupload them as a “pupil”, and proofread and rate them with stars as a teacher

- Many little bash scripts for things like preparing large interview files for transcription with `gpt4o-transcribe`.

So I’m very happy to move forward with these topics at adesso in 2026 🥳. I wanted to share this with you because it gives me the opportunity to build on my background in Model-Driven Software Engineering (MDSE) and push AI in several flavors.

Exciting 🤓.